Biometric technologies and spatial computing—could enhanced communication between human and machine transform our spatial experiences beyond all recognition?

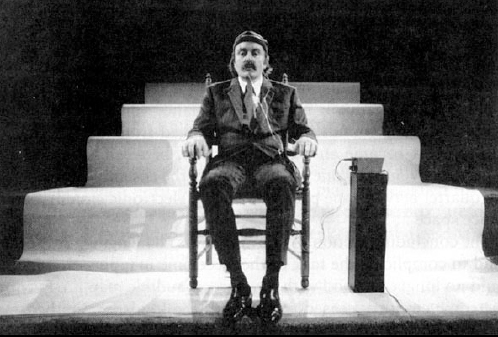

In 1965, American composer Alvin Lucier wrote the piece Music for Solo Performer. At its premiere, Lucier sat alone on stage in the Rose Art Museum, surrounded by percussion instruments that relayed the alpha-wave signals of his brain to the audience. All of this took place automatically and in real time, and the performance thus marked the first use of a technology that later became known as the brain-computer interface.1 In the past 55 years, during which Lucier has repeatedly re-performed his work, devices controlled by brain signals have become increasingly commonplace in medicine and robotics, as well as in game and user interface design. In what follows, I will examine some possible applications of this technology in architecture and analyse its potential for improving user experiences.

Potential uses of brain-computer interfaces in smart spaces

Every day we come across a growing number of interactive spatial solutions, in which changes in space are measured by sensors and the resulting data is processed and then used to initiate appropriate operations. Most often, these changes consist in the movements of the user. Technically, however, the electronic response can be triggered by any given measure that is definable as data2, including a change in the bioelectrical activity of the user’s nervous system. The Internet of Things is constantly being supplemented with new objects, and brain-computer interfaces enable us to communicate with these objects in an increasingly direct and meaningful way. In addition to pressing buttons and stepping in front of sensors, we can now begin to operate in smart spaces by means of brain signals. Such technologies have been used by people with motor disabilities for some time now, enabling them to adjust their home appliances and lighting solutions by the power of thought.3 As these technologies develop, it will become possible to initiate interactive spatial solutions based on how the user feels.

Brain-computer interfaces usually measure brain signals with an electroencephalograph (EEG)4. The latter is an electrophysiological device that monitors cortical bioelectrical activity through electrodes attached to the scalp. EEG devices that allow participation in everyday activities have a smaller number of electrodes in order to facilitate their placement, and therefore also reduced precision. It has been established that such devices have an approximately 80% accuracy rate in issuing commands to a smart home.5 Thus, for healthy subjects, they offer no significant advantage, whereas for subjects with physical disabilities, they offer an opportunity to manage their daily routines independently. The devices used in cases of severe disability and paralysis have a larger number of electrodes6, enabling the user to relay more complex commands and giving them control of neuroprostheses and speech synthesisers7. Consumer devices for ordinary users8 do not have that level of accuracy.

Better accuracy can be achieved by using extra-fine needle electrodes9 that are inserted directly into the brain for a more precise reading of the signal. A year ago, American entrepreneur and visionary Elon Musk showcased a brain-computer interface implant, the technology of which is based on inserting thousands of such electrodes into a human brain. Testing of Neuralink on human subjects is expected to begin this year. It has already been promised that this technology will eventually make it possible to perform all the operations on an iPhone by the power of thought. If this is indeed the case, we would be able to control the elements of a smart space as easily and dynamically as we habitually handle our smartphones. Smart space systems controlled by smart devices are hardly surprising to anyone these days, no more than sensor-operated lights or automatic doors. Perhaps in the future, then, it will be just as unsurprising when these or other operations are initiated by the power of thought, and the spatial environment functions as if it were a mind-controlled Internet of Things.

The human brain is characterised by neural plasticity, i.e., the ability to readapt biologically and attain new functions in response to changes in informational inputs. This makes it possible for the brain to learn how to process artificially mediated informational inputs from an implant or some other device in a meaningful way. Neuralink’s more ambitious goal is to integrate the human brain with artificial intelligence and to develop AI-enhanced cognition in humans10. In addition to the superhuman ability of, say, downloading new skills to one’s brain, this would in theory enable us to directly connect with other networked objects and beings11. Elon Musk has promised that in about ten years’ time, the technology of Neuralink will enable telepathic communication between humans. Given the materials released so far, this seems somewhat too optimistic12, yet for brain-computer interface enthusiasts it has instilled a new hope of seeing scenarios familiar from science fiction literature13 realised in their lifetime. Even though these kinds of promises should be regarded critically, Neuralink will undoubtedly bring along a level of accuracy so far unheard of in the field of brain-computer interfaces, as well as several new forms of interaction. Since it is being developed outside the academic and medical context, it will probably be more readily available to consumers.

Space as a communicative agent

Commands in brain-computer interfaces are usually given through voluntary brain signals, the meanings of which first need to be taught to the interface. The interface is trained to recognise a given signal and translate it into a particular machine-executable command. However, brain-computer interfaces also make it possible to mediate intuitive commands, using the mental state discerned from the person’s EEG data to initiate an operation. A good example of such adaptive solutions can be found in computer games, where the course of the game can be redirected based on the reactions of the player, for example when the activity level of the player drops and she needs to be slightly stimulated. A networked world makes it possible to use analogous solutions in physical space as well.

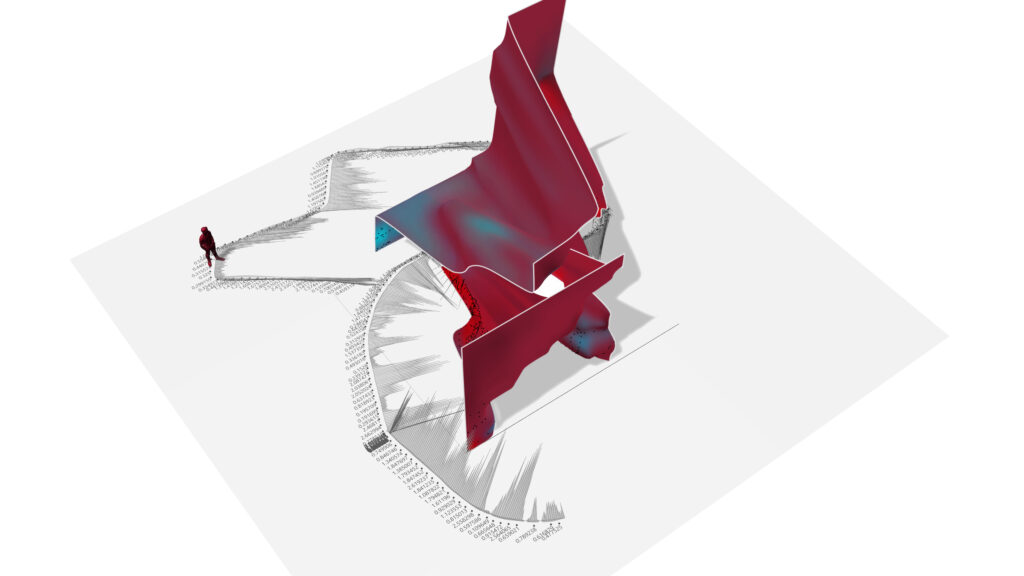

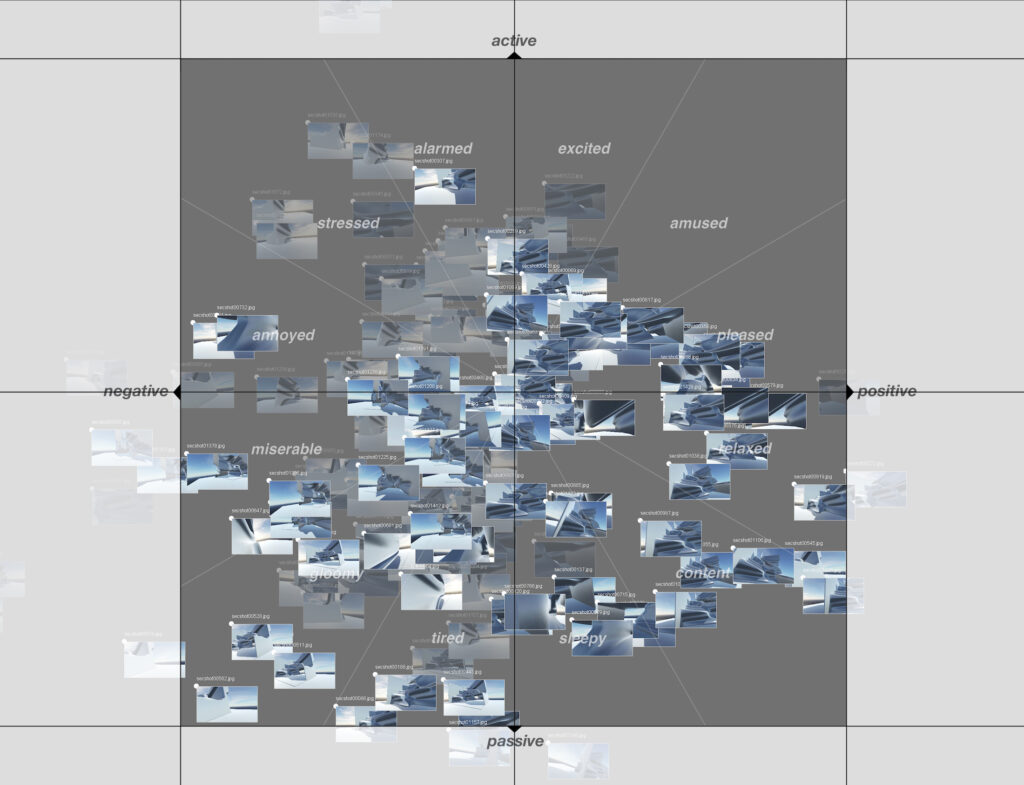

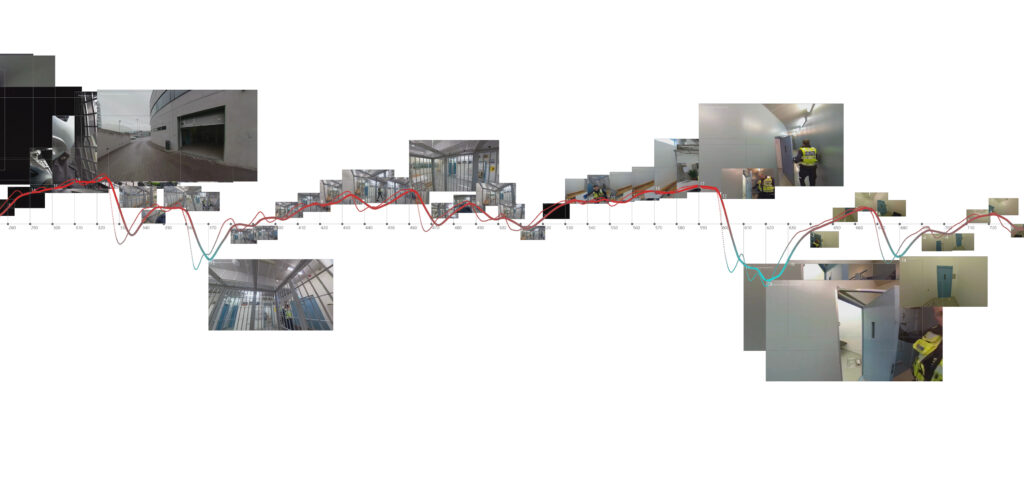

Mental states are most often identified by using the valence-arousal model, in which states are situated in a coordinate system of two axes: positive-negative (valence) and active-passive (arousal). Each sector of the coordinate system corresponds to a particular mental state14: the intersection of positive and active designates excitement, the intersection of negative and active designates anxiety, etc15. This gives us a description of mental states in terms of two numerical values, both of which can be computed from the EEG data. The values for arousal and valence are obtained by processing the data regarding the ratio of certain wave frequencies in the left and right frontal lobe16. The numerical values thereby obtained can be used as inputs in interactive spatial solutions, so that changes in the valence and arousal levels of the user lead to changes in the surrounding spatial environment. Space becomes a communicative agent that understands how its user feels and readjusts itself whenever the user needs to be stimulated.
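The two values can be illustrated with a minimal Python sketch. It assumes that the average alpha and beta power of one left and one right frontal electrode has already been extracted from the EEG signal. The names `BandPower`, `arousal`, `valence` and `quadrant` are hypothetical, and the exact formulas are only one plausible reading of the ratios described in the notes (beta/alpha for arousal, left/right frontal activity for valence, with greater left activity read as more positive), not a definitive implementation.

```python
from dataclasses import dataclass


@dataclass
class BandPower:
    """Average alpha and beta power of one frontal electrode (arbitrary units)."""
    alpha: float
    beta: float


def arousal(left: BandPower, right: BandPower) -> float:
    """Beta/alpha ratio pooled over both electrodes; higher means more active."""
    return (left.beta + right.beta) / (left.alpha + right.alpha)


def valence(left: BandPower, right: BandPower) -> float:
    """Ratio of left to right frontal activity.

    Assumption: activity is measured as beta/alpha per electrode, and a
    value above the baseline is read as a more positive state.
    """
    return (left.beta / left.alpha) / (right.beta / right.alpha)


def quadrant(valence_value: float, arousal_value: float,
             valence_base: float = 1.0, arousal_base: float = 1.0) -> str:
    """Name the sector of the valence-arousal plane relative to a personal baseline."""
    sign = "positive" if valence_value >= valence_base else "negative"
    level = "active" if arousal_value >= arousal_base else "passive"
    return f"{sign}-{level}"


if __name__ == "__main__":
    # Made-up band powers, for illustration only.
    left = BandPower(alpha=2.0, beta=4.0)
    right = BandPower(alpha=4.0, beta=4.0)
    print(quadrant(valence(left, right), arousal(left, right)))
```

In an interactive space, the returned sector (or the two raw values) would be the input that triggers a change in the environment; the baselines would come from calibrating each user against reference stimuli.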

Affective computing

The method described above also enables us to analyse the impact of space. Brain-computer interfaces grant us a new perspective on the spatial experiences and spatial preferences of the users. Analysing the connections between spatial stimuli and the mental states that they induce helps us to find new regularities in people’s spatial preferences and gives us knowledge about the actual range of mental states. With artificial intelligence, we can apply intuitive information processing akin to human perception to a vast amount of data, thus enabling the AI to learn to “understand” and “experience” affective phenomena through analysis. The branch of artificial intelligence involved in developing systems that recognise, interpret, process and simulate human feelings and emotions is called affective computing. One important application of affective computing is precisely in enhancing the interaction between human and machine. This results in more dynamic user interfaces that no longer require the user to think like a machine.

By using machine-learning algorithms to identify various patterns in how space is used, a smart space can learn to anticipate its user’s wishes and readjust itself accordingly. It is capable of learning from its mistakes and memorising associations. Space can function as a search engine that knows the preferences of its user and offers feeling-pertinent solutions even before the user herself begins to miss them. This kind of shift in the interaction between space and its user gives new meaning to the concept of adaptive space and also a new role for the architect and designer. It puts the design of spatial experiences on wholly new foundations that we are currently only beginning to imagine. After all, a spatial experience is nothing but a product of processing sensory mediated electrochemical signals, and direct contact with the physical world nothing but an illusion created by the brain.17

Sensors in our pockets

Besides EEG, there are several alternative biometric technologies for evaluating user experience18, which make it possible to measure how the person feels in an almost unnoticeable manner. We carry these technologies around with us in our everyday devices, often without even knowing it. For example, photoplethysmographic (PPG) sensors built into Apple Watch and Samsung Galaxy devices measure heart rate variability (HRV), from which it is possible to ascertain the positivity/negativity level of the mental state in the case of stronger reactions. Even though we think that we use the apps on these devices only for monitoring our daily activity, we are potentially letting the developer know of changes in our mental states. By associating valence values with other available data flows, the developers are able to draw substantial conclusions about our preferences.

Widely used galvanic skin response (GSR) sensors can identify the general activity/passivity level of the person by measuring the activity of her sweat glands. The use of GSR sensors in special-purpose gaming mice and controllers makes it possible to redirect the course of the game according to the arousal level of the player. The player gets a thrilling gaming experience, while the developer gets important information about the effectiveness of different aspects of the game. In addition to such entertainment purposes, galvanic skin response measurement is also used in polygraphs. This gives an idea of the scope of biometric technologies, while also raising the question of whether we really want to be constantly carrying around devices that have the capacity of a lie detector and that cannot be turned off. For the convenience offered by personalised applications, we are often willing to pay a rather high price without even acknowledging it: we are willing to share our personal data.

By combining HRV and GSR sensors, it is possible to measure the functioning of the sympathetic nervous system19 with open-source devices that cost only around ten euros. Even if we avoid attaching any sensors to ourselves, affective computing algorithms can identify changes in our mental states by gathering data from the cameras, microphones and gyroscopes of our smart devices and analysing our manner of speaking, gestures, body language and facial expressions.

In addition to multimedia installations, such technologies are already being used in architecture as well. For example, the project “Reset”20 by UNStudio and Scape offered every visitor a personalised spatial experience based on their biometric data, with the aim of alleviating work stress. The project “A Space for Being: Exploring Design’s Impact On Our Biology”21 by Google Design Studio and Reddymade presented three versions of a smart home, in which biometric sensors were used to analyse which environment the visitors found most comfortable. On exit, every visitor received a report about her spatial experience. Güvenc Özel’s kinetic installation “Cerebral Hut” was unique in that it changed its physical form in response to EEG data. These projects demonstrate a growing interest among architects in using new technologies to engage with the connections between the properties of space and the affect of its users.

Summary

Brain-computer interfaces have several applications in architecture, all of them aimed at enhancing user experience. They can be used to study the impact of space in order to find new connections between different stylistic idioms and mental states. They can be used as a tool in the design process to test how the client reacts to a prospective design. They can also be used in interactive spatial solutions to stimulate the feelings of the user according to the impact of the surrounding space. Various user interfaces and interactive solutions have already changed our use and cognition of space in significant ways. These interfaces are becoming ever smarter, and are thus transforming how we relate to space more and more. As brain-computer interfaces become more widespread, we can begin to create experientially charged spaces that recognise the mental states of humans and readjust themselves accordingly. Perhaps there will be a day when we as architects will not be creating these environments ourselves, but will simply let artificial intelligence design new experiences and spaces for us based on the mental states and behavioural patterns of the user groups.

When physicist Edmond Dewan offered composer Alvin Lucier the necessary technology for measuring the alpha frequency of brain waves22, Lucier was first and foremost fascinated by the challenge of composing a musical work as if without even thinking about it. The low-frequency brain waves that set off the low percussion sounds occur only in a relaxed state, i.e., when the mind is at rest. Thus, the concert performance did not consist in putting forth a random train of thought. In order to produce the sounds, the composer had to switch himself off completely from stage fright and everything else disruptive, and achieve an immediate mental state, which the low sounds of the percussion instruments then helped him to maintain. In order to give a command, he had to focus on not giving any commands. The use of a brain-computer interface in Lucier’s work was special in that the feedback loop and direct interaction served a clear aesthetic purpose. These principles are still central in working with brain-computer interfaces today. Enhanced communication between the brain and computer will make it possible to create adaptive spaces that quietly enhance our spatial experiences.

JOHANNA JÕEKALDA is an architect and head of the Estonian Academy of Arts VR Lab. Her works focus on the forms of interaction between physical and virtual space, mainly by means of experimenting with installations.

HEADER: Measuring GSR and HRV data at the VR Lab at EAA in the described experiment. Photo: Johanna Jõekalda

PUBLISHED: Maja 101-102 (summer-autumn 2020) Interior Design

1 The term was coined by Jacques Vidal, the engineer who, as early as the 1970s, arrived at a technical solution that made it possible to move a cursor on a monitor by means of brain signals.

2 Parties to an interaction can easily be replaced, as long as they are formally definable as data. According to media theorist Lev Manovich’s account, this is made possible by the language of new media. The development of desktop computers meant that images, sounds, texts as well as other forms of media became quantifiable, thus enabling intermedial translation, e.g., translation of sounds into images or brain signals into machine-executable commands.

3 E.g., Domus Smart Home platform.

4 In medical research, another technique for measuring brain activity is functional magnetic resonance imaging (fMRI), which makes it possible to identify subjects’ reactions and states in a more precise way. The fMRI device, however, is stationary: the subject is enclosed inside the scanner for the period of measurement, where she can experience only mediated stimuli (on screen; through speakers). Limitations on natural spatial perception thus nullify the technological advantages of fMRI over biometric devices that enable the user to move freely in the surrounding space and look around.

5 These statistics are drawn from research with the Domus Smart Home platform, more specifically the Konnex home automation protocol. The users were asked to switch on the lights, use the coffee machine, move the shutters and watch TV.

Nataliya Kosmyna et al., “Feasibility of BCI Control in a Realistic Smart Home Environment”, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4999433/#fn0001.

6 Approximately 64–256 electrodes

7 Elon Musk & Neuralink, “An Integrated Brain-Machine Interface Platform with Thousands of Channels”, https://www.biorxiv.org/content/10.1101/703801v4.full.pdf

8 The best-known one (and the first to enter the consumer market) is Emotiv Epoc, which has a consumer-friendly interface, but a very noisy EEG signal.

9 Even though invasive electrodes can achieve a very precise signal, they pose a risk of scar tissue, which in turn could cause the signal to weaken and disappear.

10 Matthew Nagle, 2006, BrainGate: the first time a cursor was moved by means of an implant.

11 In addition to sending out signals, electrodes can also receive signals.

12 The article was written before the 28 August 2020 Neuralink presentation.

13 For example, the characters in William Gibson’s Neuromancer have brain implants that enable them to connect directly with a computer network.

14 D. Oude Bos, “EEG-Based Emotion Recognition (The Influence of Visual and Auditory Stimuli)”, http://hmi.ewi.utwente.nl/verslagen/capita-selecta/CS-Oude_Bos-Danny.pdf

15 Positivity/negativity (i.e., valence) of the reaction is evaluated by looking at the activity of the left and right frontal lobes. Activity/passivity (i.e., arousal level) of the reaction is established by looking at the ratio of beta and alpha wave patterns.

16 In order to work out these values, one begins by extracting the α- and β-wave frequencies from the electrodes of interest, then ascertains how these frequencies change over time compared to the base level and finds the average value for every unit of time, and finally uses these to calculate the ratio of α- and β-wave frequencies, which reflects the activity/passivity level, and the ratio between the activity of the left and right frontal lobe, which reflects the positivity/negativity level. In the course of data processing, extrema are removed from the dataset, abrupt changes in the signal are interpolated and fixed-frequency data is reduced to the desired level of temporal precision. Automated data processing makes it possible to do this in real time. Individual experiences are rendered comparable by using reference stimuli to identify the affective base level and extrema of each individual and calibrating the parameters accordingly. Reference stimuli could be images from the IAPS database, or, alternatively, any sensory stimuli with established average valence-arousal values.

17 C. Frith, Making Up the Mind: How the Brain Creates Our Mental World. Oxford: Blackwell, 2007, p. 40.

18 Biometrics is the use of mathematical statistics in studying the nervous system and other biological systems.

19 In case of danger, blood pressure and heart rate will increase, and the person has to make an instinctive decision on whether to flee or fight.

20 Presented at the 2017 Salone del Mobile in Milan

21 Presented at the 2019 Milan furniture fair

22 Edmond Dewan, an engineer and scientist working for the U.S. Air Force, had by that point already managed to switch on a light by the power of thought, and had used this to transmit a message in Morse code, generating the sentence “I CAN TALK” letter by letter with alpha frequency, each letter taking 25 seconds to generate.